Executor

After you create a task dependency graph, you need to submit it to threads for execution. In this chapter, we will show you how to execute a task dependency graph.

Create an Executor

To execute a taskflow, you need to create an executor of type tf::Executor. Its constructor takes the number N of worker threads. The default value is std::thread::hardware_concurrency.

    tf::Executor executor1;     // create an executor with the number of workers
                                // equal to std::thread::hardware_concurrency
    tf::Executor executor2(4);  // create an executor of 4 worker threads

Understand Work Stealing in Executor

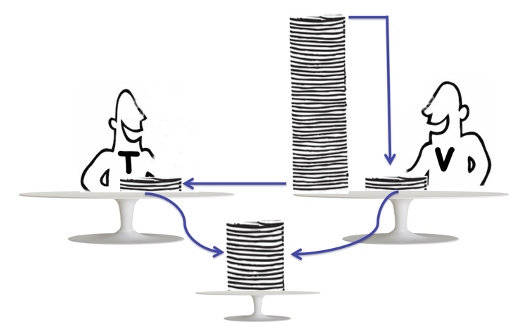

Taskflow designs a highly efficient work-stealing algorithm to schedule and run tasks in an executor. Work-stealing is a dynamic scheduling algorithm widely used in parallel computing to distribute and balance workload among multiple threads or cores. Specifically, within an executor, each worker maintains its own local queue of tasks. When a worker finishes its own tasks, instead of becoming idle or going to sleep, it (the thief) tries to steal a task from the queue of another worker (the victim). The figure above illustrates the idea of work-stealing.

The key advantage of work-stealing lies in its decentralized nature and efficiency. Most of the time, worker threads work on their local queues without contention. Stealing only occurs when a worker becomes idle, minimizing overhead associated with synchronization and task distribution. This decentralized strategy effectively balances the workload, ensuring that idle workers are put to work and that the overall computation progresses efficiently. That being said, the internal scheduling mechanisms in tf::Executor go beyond what a few sentences can explain. For the technical details, refer to the following paper:

- Tsung-Wei Huang, Dian-Lun Lin, Chun-Xun Lin, and Yibo Lin, "Taskflow: A Lightweight Parallel and Heterogeneous Task Graph Computing System," IEEE Transactions on Parallel and Distributed Systems (TPDS), vol. 33, no. 6, pp. 1303-1320, June 2022
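The owner and thief roles described above can be modeled in a few lines of standard C++. The sketch below is a deliberately simplified illustration, not Taskflow's scheduler: the names `WorkerQueue` and `run_with_stealing` are hypothetical, each queue is guarded by a plain mutex, and it assumes all tasks are loaded before the workers start and that tasks do not spawn further tasks.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

using Task = std::function<void()>;

// Hypothetical per-worker queue (illustrative only).
struct WorkerQueue {
  std::mutex mutex;
  std::deque<Task> tasks;

  // The owner takes the most recently pushed task (back of the deque).
  bool pop(Task& out) {
    std::lock_guard<std::mutex> lock(mutex);
    if (tasks.empty()) return false;
    out = std::move(tasks.back());
    tasks.pop_back();
    return true;
  }

  // A thief takes the oldest task (front of the deque).
  bool steal(Task& out) {
    std::lock_guard<std::mutex> lock(mutex);
    if (tasks.empty()) return false;
    out = std::move(tasks.front());
    tasks.pop_front();
    return true;
  }
};

// Runs every pre-loaded task using one worker thread per queue and returns
// the number of tasks that were obtained by stealing.
std::size_t run_with_stealing(std::vector<WorkerQueue>& queues) {
  assert(!queues.empty());
  const std::size_t n = queues.size();
  std::atomic<std::size_t> stolen{0};
  std::vector<std::thread> workers;

  for (std::size_t id = 0; id < n; ++id) {
    workers.emplace_back([&queues, &stolen, id, n] {
      Task task;
      for (;;) {
        // 1. Prefer local work: no other worker's queue is touched.
        if (queues[id].pop(task)) { task(); continue; }

        // 2. Local queue is empty: become a thief and probe each victim once.
        bool found = false;
        for (std::size_t k = 1; k < n && !found; ++k) {
          found = queues[(id + k) % n].steal(task);
        }

        // 3. Nothing left anywhere: this sketch lets the worker exit.
        if (!found) return;
        ++stolen;
        task();
      }
    });
  }
  for (auto& w : workers) w.join();
  return stolen.load();
}
```

Loading all tasks into a single queue and calling `run_with_stealing` on, say, four queues lets the other three workers obtain work only through `steal`, which is the load-balancing effect the text describes.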

Execute a Taskflow

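tf::Executor provides a set of run_* methods, tf::Executor::run, tf::Executor::run_n, and tf::Executor::run_until, to run a taskflow once, multiple times, or until a given predicate evaluates to true. Each method accepts an optional callback that is invoked after the execution completes and returns a tf::Future that you can use to wait for the execution to finish.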

     1: // Declare an executor and a taskflow
     2: tf::Executor executor;
     3: tf::Taskflow taskflow;
     4:
     5: // Add three tasks into the taskflow
     6: tf::Task A = taskflow.emplace([] () { std::cout << "This is TaskA\n"; });
     7: tf::Task B = taskflow.emplace([] () { std::cout << "This is TaskB\n"; });
     8: tf::Task C = taskflow.emplace([] () { std::cout << "This is TaskC\n"; });
     9:
    10: // Build precedence between tasks
    11: A.precede(B, C);
    12:
    13: tf::Future<void> fu = executor.run(taskflow);
    14: fu.wait();  // block until the execution completes
    15:
    16: executor.run(taskflow, [](){ std::cout << "end of 1 run"; }).wait();
    17: executor.run_n(taskflow, 4);
    18: executor.wait_for_all();  // block until all associated executions finish
    19: executor.run_n(taskflow, 4, [](){ std::cout << "end of 4 runs"; }).wait();
    20: executor.run_until(taskflow, [cnt=0] () mutable { return ++cnt == 10; });

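Lines 6–8 create a taskflow consisting of three tasks, A, B, and C. Lines 13–14 execute the taskflow once and block until its completion. Line 16 runs the taskflow once and registers a callback that is invoked when the execution finishes. Lines 17–18 execute the taskflow four times and use tf::Executor::wait_for_all to block until all submitted executions finish. Line 19 runs the taskflow four times and invokes a callback after the last run. Line 20 keeps running the taskflow until the given predicate returns true.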

Understand the Execution Order

While it is possible to submit the same taskflow to an executor multiple times, these multiple runs will be synchronized to a sequential chain of executions in the order of their submissions. For example, the three submissions below will finish in order, where execution #1 completes before #2, and execution #2 completes before execution #3:

    executor.run(taskflow);        // execution #1
    executor.run_n(taskflow, 10);  // execution #2
    executor.run(taskflow);        // execution #3
    executor.wait_for_all();       // execution #1 -> execution #2 -> execution #3

However, there is no deterministic order for different taskflows submitted simultaneously. For example, the three submissions below may finish in an arbitrary order, since the three taskflows are distinct:

    executor.run(taskflow1);        // execution 1
    executor.run_n(taskflow2, 10);  // execution 2
    executor.run(taskflow3);        // execution 3
    executor.wait_for_all();        // no order guarantee among the three taskflows

Understand the Ownership

A running taskflow must remain alive for the duration of its execution because the executor does not take ownership of the taskflow. It is your responsibility to ensure that a taskflow is not destroyed while it is still running. For example, the code below can result in undefined behavior.

    tf::Executor executor;  // create an executor

    // create a taskflow whose lifetime is restricted by the block statement
    {
      tf::Taskflow taskflow;

      // adds tasks to the taskflow
      // ...

      // runs the taskflow once
      executor.run(taskflow);

    } // leaving the block statement will destroy taskflow while it is running,
      // resulting in undefined behavior

Similarly, you should avoid modifying a taskflow while it is running:

    tf::Taskflow taskflow;

    // Add tasks into the taskflow
    // ...

    // Declare an executor
    tf::Executor executor;

    tf::Future<void> future = executor.run(taskflow);

    // altering the taskflow while it is running leads to undefined behavior
    taskflow.emplace([](){ std::cout << "Add a new task\n"; });

You must always keep a taskflow alive and must not modify it while it is running on an executor.

Execute a Taskflow with Transferred Ownership

You can transfer the ownership of a taskflow to an executor and run it without wrangling with the lifetime issue of that taskflow. Each run_* method discussed in the previous section comes with an overload that takes a moved taskflow object.

    tf::Taskflow taskflow;
    tf::Executor executor;

    taskflow.emplace([](){});

    // let the executor manage the lifetime of the submitted taskflow
    executor.run(std::move(taskflow));

    // now taskflow has no tasks
    assert(taskflow.num_tasks() == 0);

However, you should avoid moving a running taskflow, which can result in undefined behavior.

    tf::Taskflow taskflow;
    tf::Executor executor;

    taskflow.emplace([](){});

    // executor does not manage the lifetime of taskflow
    executor.run(taskflow);

    // error! you cannot move a taskflow while it is running
    executor.run(std::move(taskflow));

The correct way to submit a taskflow with moved ownership to an executor is to ensure all previous runs have completed. The executor will automatically release the resources of a moved taskflow right after its execution completes.

    // submit the taskflow and wait until it completes
    executor.run(taskflow).wait();

    // now it's safe to move the taskflow to the executor and run it
    executor.run(std::move(taskflow));

Likewise, you cannot move a taskflow that is running on an executor. You must wait until all previous runs of that taskflow have completed before moving it.

    // submit the taskflow and wait until it completes
    executor.run(taskflow).wait();

    // now it's safe to move the taskflow to another
    tf::Taskflow moved_taskflow(std::move(taskflow));

Execute a Taskflow from an Internal Worker Cooperatively

Each run variant of tf::Executor returns a tf::Future. When tf::Future::wait is called, the caller blocks without making any progress until the underlying state becomes ready. However, this blocking design can lead to potential deadlocks, particularly when multiple taskflows are launched from within the internal workers of the same executor. For example, the following code creates a taskflow of 1,000 tasks, where each task runs another taskflow of 500 tasks in a blocking manner, leading to a potential deadlock problem:

    tf::Executor executor(2);
    tf::Taskflow taskflow;
    std::array<tf::Taskflow, 1000> others;

    for(size_t n=0; n<1000; n++) {
      for(size_t i=0; i<500; i++) {
        others[n].emplace([&](){});
      }
      taskflow.emplace([&executor, &tf=others[n]](){
        // Blocking a worker can cause deadlock if all workers are waiting
        // for their taskflows to complete without making any progress
        executor.run(tf).wait();
      });
    }
    executor.run(taskflow).wait();

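To avoid this deadlock issue, tf::Executor provides tf::Executor::corun, which runs a taskflow from a worker of that executor. Instead of blocking, the calling worker runs the taskflow cooperatively with the other workers: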

    tf::Executor executor(2);
    tf::Taskflow taskflow;
    std::array<tf::Taskflow, 1000> others;

    std::atomic<size_t> counter{0};

    for(size_t n=0; n<1000; n++) {
      for(size_t i=0; i<500; i++) {
        others[n].emplace([&](){ counter++; });
      }
      taskflow.emplace([&executor, &tf=others[n]](){
        // calling worker coruns the taskflow cooperatively with other workers
        executor.corun(tf);
      });
    }
    executor.run(taskflow).wait();

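Similar to tf::Executor::corun, tf::Executor::corun_until keeps the calling worker running tasks cooperatively until the given predicate returns true. For example, a worker can stay productive while it waits for an asynchronous operation to finish: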

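    taskflow.emplace([&](){
      using namespace std::chrono_literals;  // enables the 100s literal
      // launch asynchronously so that wait_for never reports a deferred state
      auto fu = std::async(std::launch::async, [](){
        std::this_thread::sleep_for(100s);
      });
      // keep the worker busy with other tasks until the future is ready
      executor.corun_until([&fu](){
        return fu.wait_for(std::chrono::seconds(0)) == std::future_status::ready;
      });
    });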
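To see why cooperative waiting avoids the deadlock, it can help to model it outside Taskflow. The following sketch uses only the standard library. `TinyPool`, its single shared queue, and `run_nested` are hypothetical names invented for illustration and make no claim about how tf::Executor is implemented. The pool has one worker, so a task that blocked while waiting for its children would never finish. Here the waiting task keeps running queued tasks itself until its predicate holds.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical minimal pool with one shared queue (illustrative only).
class TinyPool {
 public:
  explicit TinyPool(std::size_t num_workers) {
    assert(num_workers > 0);
    for (std::size_t i = 0; i < num_workers; ++i) {
      // The worker loop is itself a cooperative wait for the stop flag.
      workers_.emplace_back([this] {
        corun_until([this] { return stop_.load(); });
      });
    }
  }

  // Stops the workers; tasks still queued at this point are dropped.
  ~TinyPool() {
    stop_ = true;
    for (auto& w : workers_) w.join();
  }

  void submit(std::function<void()> task) {
    std::lock_guard<std::mutex> lock(mutex_);
    tasks_.push_back(std::move(task));
  }

  // Keeps executing queued tasks on the calling thread until done() is true.
  template <typename Pred>
  void corun_until(Pred&& done) {
    while (!done()) {
      std::function<void()> task;
      {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!tasks_.empty()) {
          task = std::move(tasks_.front());
          tasks_.pop_front();
        }
      }
      if (task) task();
      else std::this_thread::yield();
    }
  }

 private:
  std::mutex mutex_;
  std::deque<std::function<void()>> tasks_;
  std::atomic<bool> stop_{false};
  std::vector<std::thread> workers_;
};

// A parent task spawns `children` subtasks and waits for them from inside
// the only worker. Returns the number of subtasks that ran.
int run_nested(int children) {
  std::atomic<int> finished{0};
  std::atomic<bool> parent_done{false};
  TinyPool pool(1);

  pool.submit([&] {
    for (int i = 0; i < children; ++i) {
      pool.submit([&finished] { ++finished; });
    }
    // Cooperative wait: the worker executes the children itself.
    pool.corun_until([&] { return finished.load() == children; });
    parent_done = true;
  });

  // The main thread is not a worker, so it can simply wait.
  while (!parent_done.load()) std::this_thread::yield();
  return finished.load();
}
```

Calling `run_nested(100)` completes even though the pool has a single worker, because that worker never stops taking tasks from the queue while it waits.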

Thread Safety of Executor

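All run_* methods of tf::Executor are thread-safe. You can call them from multiple threads to run different taskflows simultaneously: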

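    tf::Executor executor;
    std::array<tf::Taskflow, 10> taskflows;
    std::vector<std::thread> threads;

    for(int i=0; i<10; ++i) {
      // capture i by value and everything else by reference
      threads.emplace_back([&, i](){
        executor.run(taskflows[i]);  // thread i runs taskflow i
      });
    }

    // join the submitting threads so that every run has been issued
    for(auto& t : threads) {
      t.join();
    }

    executor.wait_for_all();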

Query the Worker ID

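Each worker thread in a tf::Executor is assigned a unique integer identifier in the range [0, N), where N is the number of worker threads in the executor. You can query the identifier of the calling thread using tf::Executor::this_worker_id. If the calling thread is not a worker of the executor, the method returns -1. This makes it convenient to keep a one-to-one mapping between a worker and its application data: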

    std::vector<int> data[8];    // worker-specific data vector
    tf::Taskflow taskflow;
    tf::Executor executor(8);    // an executor of eight workers

    assert(executor.this_worker_id() == -1);  // main thread is not a worker

    taskflow.emplace([&](){
      int id = executor.this_worker_id();  // in the range [0, 8)
      auto& vec = data[id];                // worker id process data[id]
    });
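A dense identifier in [0, N) is useful because it can index worker-private storage, so workers never contend on shared state. The sketch below shows that pattern with plain std::thread objects standing in for executor workers. The function name `sum_with_per_worker_slots` is hypothetical, and in a Taskflow program the index would come from the worker identifier rather than from the loop variable.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Sums 1..count with num_workers threads. Each thread writes only to the
// slot selected by its own id, so no lock or atomic is required.
long long sum_with_per_worker_slots(std::size_t num_workers, long long count) {
  assert(num_workers > 0);
  std::vector<long long> partial(num_workers, 0);  // one slot per worker
  std::vector<std::thread> workers;
  const long long stride = static_cast<long long>(num_workers);

  for (std::size_t id = 0; id < num_workers; ++id) {
    workers.emplace_back([&partial, id, stride, count] {
      // Worker `id` handles the values id+1, id+1+N, id+1+2N, ...
      for (long long v = static_cast<long long>(id) + 1; v <= count; v += stride) {
        partial[id] += v;
      }
    });
  }
  for (auto& w : workers) w.join();

  // Merge the worker-private results once all workers have finished.
  return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

The merge step runs only after every worker has joined, which is what makes the unsynchronized per-slot writes safe.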

Observe Thread Activities

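You can observe thread activities in an executor, such as when a worker thread starts executing a task and when it leaves the execution, using tf::ObserverInterface. This interface class declares the methods you override to react to those events: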

    class ObserverInterface {
      public:
      virtual ~ObserverInterface() = default;
      virtual void set_up(size_t num_workers) = 0;
      virtual void on_entry(tf::WorkerView worker_view, tf::TaskView task_view) = 0;
      virtual void on_exit(tf::WorkerView worker_view, tf::TaskView task_view) = 0;
    };

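There are three methods you must define in your derived class: tf::ObserverInterface::set_up, tf::ObserverInterface::on_entry, and tf::ObserverInterface::on_exit. The set_up method receives the number of workers in the executor, which you can use to initialize per-worker storage. The on_entry and on_exit methods are called by a worker thread before and after it executes a task, respectively.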

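You can associate an executor with one or multiple observers using tf::Executor::make_observer, which returns a std::shared_ptr to the created observer. The following example demonstrates how to define and use an observer: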

    #include <taskflow/taskflow.hpp>

    struct MyObserver : public tf::ObserverInterface {

      MyObserver(const std::string& name) {
        std::cout << "constructing observer " << name << '\n';
      }

      void set_up(size_t num_workers) override final {
        std::cout << "setting up observer with " << num_workers << " workers\n";
      }

      void on_entry(tf::WorkerView w, tf::TaskView tv) override final {
        std::ostringstream oss;
        oss << "worker " << w.id() << " ready to run " << tv.name() << '\n';
        std::cout << oss.str();
      }

      void on_exit(tf::WorkerView w, tf::TaskView tv) override final {
        std::ostringstream oss;
        oss << "worker " << w.id() << " finished running " << tv.name() << '\n';
        std::cout << oss.str();
      }
    };

    int main(){

      tf::Executor executor(4);

      // Create a taskflow of eight tasks
      tf::Taskflow taskflow;

      auto A = taskflow.emplace([] () { std::cout << "1\n"; }).name("A");
      auto B = taskflow.emplace([] () { std::cout << "2\n"; }).name("B");
      auto C = taskflow.emplace([] () { std::cout << "3\n"; }).name("C");
      auto D = taskflow.emplace([] () { std::cout << "4\n"; }).name("D");
      auto E = taskflow.emplace([] () { std::cout << "5\n"; }).name("E");
      auto F = taskflow.emplace([] () { std::cout << "6\n"; }).name("F");
      auto G = taskflow.emplace([] () { std::cout << "7\n"; }).name("G");
      auto H = taskflow.emplace([] () { std::cout << "8\n"; }).name("H");

      // create an observer
      std::shared_ptr<MyObserver> observer = executor.make_observer<MyObserver>(
        "MyObserver"
      );

      // run the taskflow
      executor.run(taskflow).get();

      // remove the observer (optional)
      executor.remove_observer(std::move(observer));

      return 0;
    }

The above code produces the following output:

    constructing observer MyObserver
    setting up observer with 4 workers
    worker 2 ready to run A
    1
    worker 2 finished running A
    worker 2 ready to run B
    2
    worker 1 ready to run C
    worker 2 finished running B
    3
    worker 2 ready to run D
    worker 3 ready to run E
    worker 1 finished running C
    4
    5
    worker 1 ready to run F
    worker 2 finished running D
    worker 3 finished running E
    6
    worker 2 ready to run G
    worker 3 ready to run H
    worker 1 finished running F
    7
    8
    worker 2 finished running G
    worker 3 finished running H

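It is expected that lines written to std::cout by different workers interleave with each other, since four workers participate in task scheduling. However, for each task, the "ready" message always appears before the task's own output (the number), and the "finished" message always appears after it.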
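The same three hooks can collect measurements instead of printing. The sketch below is a self-contained model rather than Taskflow code: `Observer`, `TimingObserver`, and `run_observed` are hypothetical stand-ins that mirror the shape of the interface above, and the single-worker driver assumes the hooks are called as set_up once, then on_entry and on_exit around each task.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for an observer interface (illustrative names).
struct Observer {
  virtual ~Observer() = default;
  virtual void set_up(std::size_t num_workers) = 0;
  virtual void on_entry(std::size_t worker_id, const std::string& task) = 0;
  virtual void on_exit(std::size_t worker_id, const std::string& task) = 0;
};

// Records how long each named task ran. The map is not synchronized, so
// this version is only safe with the single-worker driver below.
class TimingObserver : public Observer {
 public:
  using Clock = std::chrono::steady_clock;

  void set_up(std::size_t num_workers) override {
    started_.resize(num_workers);  // one start time per worker
  }

  void on_entry(std::size_t worker_id, const std::string&) override {
    assert(worker_id < started_.size());
    started_[worker_id] = Clock::now();
  }

  void on_exit(std::size_t worker_id, const std::string& task) override {
    assert(worker_id < started_.size());
    elapsed_[task] = Clock::now() - started_[worker_id];
  }

  const std::map<std::string, Clock::duration>& elapsed() const {
    return elapsed_;
  }

 private:
  std::vector<Clock::time_point> started_;
  std::map<std::string, Clock::duration> elapsed_;
};

using NamedTask = std::pair<std::string, std::function<void()>>;

// Minimal single-worker driver that invokes the hooks around each task.
void run_observed(const std::vector<NamedTask>& tasks, Observer& observer) {
  observer.set_up(1);
  for (const auto& entry : tasks) {
    observer.on_entry(0, entry.first);
    entry.second();
    observer.on_exit(0, entry.first);
  }
}
```

With several workers, the per-worker `started_` slots would remain private to each worker, but the shared `elapsed_` map would need a lock or per-worker maps merged afterwards.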

Modify Worker Property

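You can change the property of each worker thread from its executor, such as assigning thread-processor affinity before the worker enters the scheduler loop and post-processing additional information after the worker leaves the scheduler loop, by passing an instance derived from tf::WorkerInterface to the executor. The example below uses tf::WorkerInterface to affine each worker to the CPU core equal to its id on a Linux platform: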

    #include <cstdio>
    #include <pthread.h>
    #include <thread>
    #include <taskflow/taskflow.hpp>

    // affine the given thread to the given core index (linux-specific)
    bool affine(std::thread& thread, unsigned int core_id) {
      cpu_set_t cpuset;
      CPU_ZERO(&cpuset);
      CPU_SET(core_id, &cpuset);
      pthread_t native_handle = thread.native_handle();
      return pthread_setaffinity_np(native_handle, sizeof(cpu_set_t), &cpuset) == 0;
    }

    class CustomWorkerBehavior : public tf::WorkerInterface {

      public:

      // to call before the worker enters the scheduling loop
      void scheduler_prologue(tf::Worker& w) override {
        printf("worker %zu prepares to enter the work-stealing loop\n", w.id());

        // now affine the worker to a particular CPU core equal to its id
        if(affine(w.thread(), w.id())) {
          printf("successfully affines worker %zu to CPU core %zu\n", w.id(), w.id());
        }
        else {
          printf("failed to affine worker %zu to CPU core %zu\n", w.id(), w.id());
        }
      }

      // to call after the worker leaves the scheduling loop
      void scheduler_epilogue(tf::Worker& w, std::exception_ptr) override {
        printf("worker %zu left the work-stealing loop\n", w.id());
      }
    };

    int main() {
      tf::Executor executor(4, tf::make_worker_interface<CustomWorkerBehavior>());
      return 0;
    }

Running the program produces output such as the following (the order may vary between runs):

    worker 3 prepares to enter the work-stealing loop
    successfully affines worker 3 to CPU core 3
    worker 3 left the work-stealing loop
    worker 0 prepares to enter the work-stealing loop
    successfully affines worker 0 to CPU core 0
    worker 0 left the work-stealing loop
    worker 1 prepares to enter the work-stealing loop
    worker 2 prepares to enter the work-stealing loop
    successfully affines worker 1 to CPU core 1
    worker 1 left the work-stealing loop
    successfully affines worker 2 to CPU core 2
    worker 2 left the work-stealing loop

When you create an executor, it spawns a set of worker threads to run tasks using a work-stealing scheduling algorithm. The execution logic of the scheduler and its interaction with each spawned worker via tf::WorkerInterface is sketched below:

    for(size_t n=0; n<num_workers; n++) {
      create_thread([](Worker& worker){

        // enter the scheduling loop
        // Here, WorkerInterface::scheduler_prologue is invoked, if any
        worker_interface->scheduler_prologue(worker);

        try {
          while(1) {
            perform_work_stealing_algorithm();
            if(stop) {
              break;
            }
          }
        } catch(...) {
          exception_ptr = std::current_exception();
        }

        // leaves the scheduling loop and joins this worker thread
        // Here, WorkerInterface::scheduler_epilogue is invoked, if any
        worker_interface->scheduler_epilogue(worker, exception_ptr);
      });
    }